Welcome to the Q3 2025 Edition of the AI in Gaming Industry Report.

AI captured 53% of global VC funding in H1 2025, yet only 5% of AI pilot projects reach production and deliver real benefits. That gap says a lot about the current state of AI adoption.

While headlines promise revolution, the real transformation is happening in specific, often unglamorous corners, especially in game development. Some tools have crossed into production-ready territory. Most haven’t. So let’s look at the AI applications that work today, and at what the next year will realistically bring.

With roughly 20% of new Steam releases now disclosing AI usage, here’s what happened over the summer.

In The News

90% of developers now use AI in workflows, per Google Cloud

At Devcom 2025, Google announced that 90% of game developers use some form of AI, and 97% believe it’s transforming the industry. Applications range from automating repetitive tasks to accelerating playtesting and designing dynamic levels. The gap between “use some form of AI” and “successfully implemented AI” remains wide.

NetEase posts record Q2 revenue with heavy AI integration

NetEase’s Q2 results were driven by titles like MARVEL Mystic Mayhem and FragPunk, with AI used both in development, via its own LLM Confucius, and in ad optimization. The company treats AI-designed games as a core strategy, making it one of the first major publishers to publicly tie financial performance directly to AI implementation.

Steam: Nearly 1 in 5 new games use generative AI

Roughly 20% of games released on Steam in 2025 disclosed using generative AI for content or character generation, a steep rise from earlier in the year. Actual usage may be higher, as not all developers disclose their tools.

Unity beats Q2 expectations with AI-powered ad platform

Unity’s Q2 performance exceeded expectations largely due to its AI-powered advertising platform. The platform uses AI for targeting optimization and creative generation, showing that some of the clearest AI wins come from adjacent services rather than core production.

Inworld AI launches first AI runtime for consumer applications

Inworld bills it as the first AI runtime engineered to scale consumer applications from 10 to 10M users with minimal code changes. The platform automates AI operations and targets the critical gap where most projects stall between prototype and production. Early results: Wishroll scaled to 1 million users in 19 days with a 95% cost reduction, while Bible Chat cut voice costs by 85%. The company has raised over $120M and works with Xbox, Disney, NVIDIA, and NBCUniversal. Pricing is usage-based with no upfront costs.

Ludus AI launches automated Blueprint generation for Unreal Engine

Ludus AI opened its beta in August 2025 with what it calls the first system to automatically generate Blueprints in Unreal Engine, cutting creation time from days to minutes.

The platform has generated 10,000 3D models and analyzed 10,000 Blueprints for more than 20,000 users, and positions itself as a comprehensive Unreal Engine assistant: it handles Blueprint creation and analysis, 3D model generation, code writing and debugging, and project documentation. It also offers self-hosted deployment for complete privacy and data control.

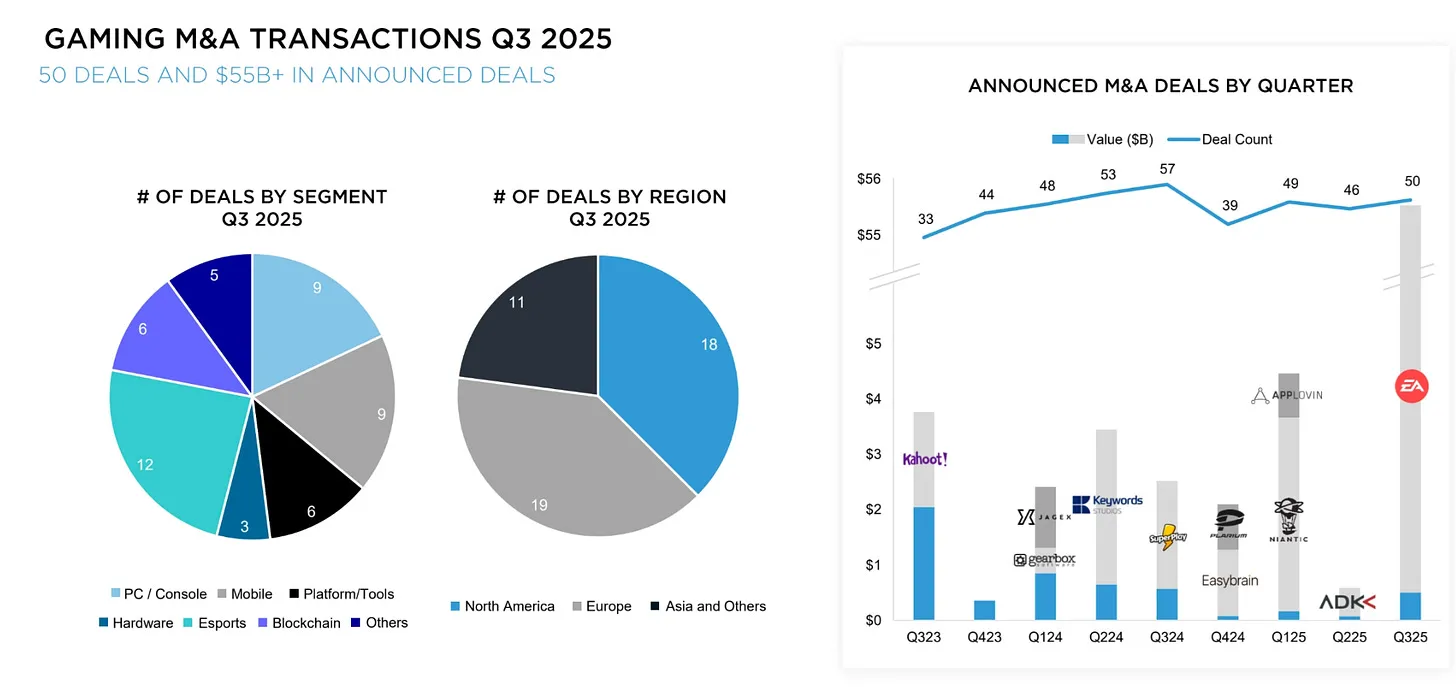

Q3 brings a landmark spike in gaming M&A

Drake Star’s Q3 2025 report revealed record M&A activity led by the largest leveraged buyout in gaming history: a consortium of Saudi Arabia’s PIF, Silver Lake, and Affinity Partners agreed to acquire Electronic Arts for $55 billion. Overall gaming M&A reached 50 deals, the highest in four quarters. Private financing rebounded for the first time in six quarters with 115 rounds, led by Lingokids ($120M) and Good Job Games ($60M). Western public gaming equities surged, with the Drake Star Western Gaming Index up 31.3% year-to-date, led by Roblox (+136.9%), Unity (+77.9%), and Everplay (+75.0%). Drake Star expects the AI and tools sectors to stay hot, with private equity momentum showing no signs of slowing.

Investments

AI companies in gaming and developer tooling captured significant investor attention in Q2 and Q3 2025. Notable funding rounds during this period include:

The Latest Research

lmgame-Bench: How Good are LLMs at Playing Games? introduces a benchmark suite for evaluating LLMs across platformer, puzzle, and narrative games through a unified API. Results showed RL training on a single game transferred to unseen games and planning tasks, though LLMs still struggle with vision perception and prompt sensitivity in complex environments.

Agents Play Thousands of 3D Video Games presents PORTAL, a framework where AI agents learn to play thousands of 3D video games using language-guided, embodied interaction. Agents demonstrate generalizable skills and quick learning across games, though bottlenecks remain in environments requiring social collaboration and emergent complex tasks.

AXIOM: Learning to Play Games in Minutes learns seven times faster than leading DeepMind models by focusing on object-centric modeling rather than pixel memorization. It outperforms much larger models with just 0.95M parameters versus 420M and adapts well across the Gameworld 10k benchmark. Limitations appear in games demanding intricate narrative logic or hidden information.

Narrative Co-Creation frameworks explore how AI models collaborate with humans to co-create gaming narratives, with new assessment methods for creativity, quality, and engagement of AI-generated stories. Multiple papers introduce benchmarking paradigms for strategic dialogue, interactive reasoning, and embodied gameplay.

General Game Playing advances demonstrate new agent frameworks that play a wide range of games using code-based world modeling, highlighting progress in generalizing beyond fixed rule-based systems. There’s also a push to combine RL with LLMs, pairing declarative and procedural knowledge for better reasoning in multi-step, interactive challenges.
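"Code-based world modeling" means the agent (typically an LLM) writes the game’s rules as an executable transition function and then plans against that function instead of learning purely by trial and error. A minimal sketch, assuming a hypothetical toy grid game: `transition` is hand-written here and stands in for code an LLM would generate, and breadth-first search is just one simple planner, not the method of any specific paper.

```python
from collections import deque

# Hypothetical toy game: walk a 4x4 grid from start to goal, avoiding walls.
WALLS = {(1, 1), (2, 1), (1, 2)}
SIZE = 4
ACTIONS = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}

def transition(state, action):
    """World model as code: next state for a (state, action) pair.
    In a code-based world-modeling agent an LLM would write this function;
    here it is hand-written for illustration."""
    dx, dy = ACTIONS[action]
    x, y = state[0] + dx, state[1] + dy
    if not (0 <= x < SIZE and 0 <= y < SIZE) or (x, y) in WALLS:
        return state  # blocked moves leave the state unchanged
    return (x, y)

def plan(start, goal):
    """Breadth-first search over the code world model; returns a shortest action list."""
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, actions = frontier.popleft()
        if state == goal:
            return actions
        for action in ACTIONS:
            nxt = transition(state, action)
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, actions + [action]))
    return None  # goal unreachable under this model

if __name__ == "__main__":
    print(plan((0, 0), (3, 3)))
```

Because the rules are explicit code, the agent can check a whole plan before taking a single in-game step, which is the generalization advantage over fixed, hand-authored rule systems.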

Playstyle and Artificial Intelligence examines how AI can model, adapt to, and shape player playstyles by analyzing in-game behaviors. The framework proposes adaptive AI that tailors difficulty and narrative to individual preferences, though accurately capturing nuanced player intent and ensuring user satisfaction remain challenges.
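A common baseline for adaptive difficulty is to track a player’s recent success rate and nudge difficulty toward a target rate. The sketch below shows that generic idea, not the framework the paper proposes; the class name, the 0.6 target rate, the window size, and the step size are all illustrative assumptions.

```python
from collections import deque

class DifficultyAdjuster:
    """Hypothetical helper: keeps a difficulty level in [0, 1] so the player's
    recent success rate tracks a target. Parameters are illustrative, not tuned."""

    def __init__(self, target_rate=0.6, window=10, step=0.05):
        self.target_rate = target_rate
        self.step = step
        self.results = deque(maxlen=window)  # rolling window of recent outcomes
        self.difficulty = 0.5

    def record(self, player_won: bool) -> float:
        self.results.append(1.0 if player_won else 0.0)
        rate = sum(self.results) / len(self.results)
        # Winning more often than the target -> harder; less often -> easier.
        if rate > self.target_rate:
            self.difficulty = min(1.0, self.difficulty + self.step)
        elif rate < self.target_rate:
            self.difficulty = max(0.0, self.difficulty - self.step)
        return self.difficulty
```

A playstyle-aware system would replace the single win/loss signal with richer behavioral features, which is exactly where the paper locates the open problem of capturing nuanced player intent.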

This is impressive progress, but it also exposes a critical bottleneck: LLMs excel at language but struggle with spatial reasoning and physical interaction. That limitation is pushing the industry toward an entirely different paradigm.

The Hottest Trends and Tools

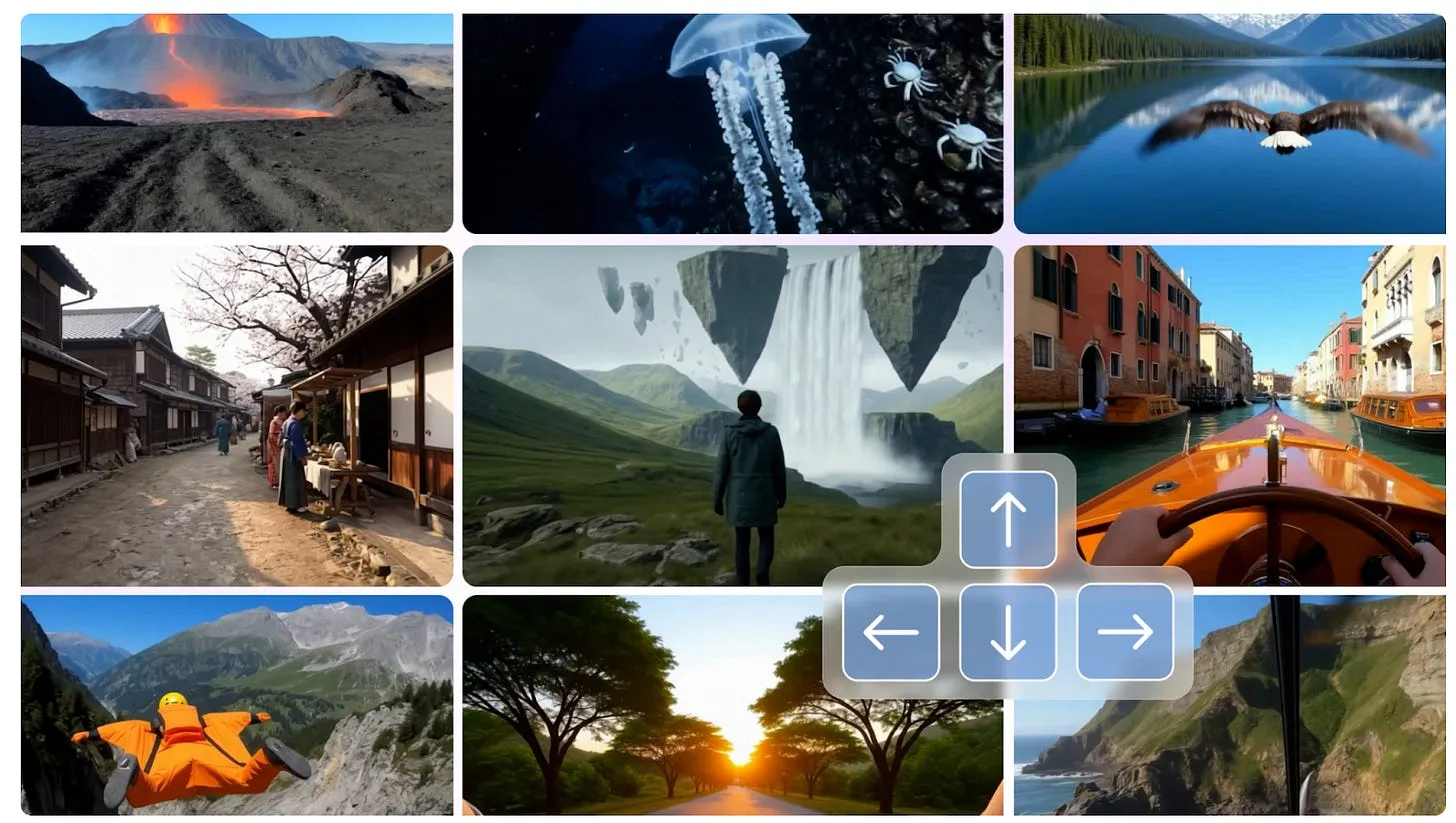

World models: From prediction to spatial understanding

Some researchers believe the real breakthrough won’t come from better language models. It’ll come from AI that learns by watching, not reading.

World models represent a fundamental shift from predicting the next word to understanding physical space. And here’s the interesting part: unlike the LLM race where OpenAI and Anthropic are miles ahead, world models are young enough that scrappy, well-funded startups actually have a shot.

The tech is moving fast. Google DeepMind’s Genie 3 (August 2025) can generate entire 3D worlds from text prompts at 24 FPS in 720p, keeping everything visually consistent for up to a minute with realistic physics.

Meta’s V-JEPA 2 (June 2025) showed something remarkable. After watching 1M+ hours of video and just 62 hours of actual robot footage, it could handle new manipulation tasks with 65-80% success rates and run 30x faster than NVIDIA’s Cosmos. Tencent’s Yan took things further with infinite simulation length and the ability to turn any image into an interactive video.
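Robot results like V-JEPA 2’s come from planning with the world model rather than from a hand-written policy: candidate action sequences are rolled out inside the model, and the sequence whose predicted outcome lands closest to a goal is chosen. Meta’s paper describes using the cross-entropy method (CEM) for this search. The sketch below runs CEM against a hypothetical 2D point-mass whose `predict` function stands in for the learned model; V-JEPA 2 compares predicted and goal video embeddings rather than raw positions, and all sample counts here are illustrative.

```python
import random

def predict(state, action):
    """Hypothetical stand-in for a learned world model: a 2D point mass that
    moves by the action each step, each component clipped to [-1, 1]."""
    ax = max(-1.0, min(1.0, action[0]))
    ay = max(-1.0, min(1.0, action[1]))
    return (state[0] + ax, state[1] + ay)

def rollout_cost(state, actions, goal):
    """Imagine an action sequence in the model; score it by the L1 distance
    between the predicted final state and the goal."""
    for action in actions:
        state = predict(state, action)
    return abs(state[0] - goal[0]) + abs(state[1] - goal[1])

def cem_plan(state, goal, horizon=5, samples=200, elites=20, iters=10, seed=0):
    """Cross-entropy method: sample action sequences from a Gaussian, keep the
    lowest-cost elites, refit the Gaussian to them, and repeat."""
    rng = random.Random(seed)
    mean = [[0.0, 0.0] for _ in range(horizon)]
    std = [[1.0, 1.0] for _ in range(horizon)]
    for _ in range(iters):
        candidates = [
            [[rng.gauss(mean[t][d], std[t][d]) for d in range(2)]
             for t in range(horizon)]
            for _ in range(samples)
        ]
        candidates.sort(key=lambda seq: rollout_cost(state, seq, goal))
        best = candidates[:elites]
        # Refit each (timestep, dimension) Gaussian to the elite sequences.
        for t in range(horizon):
            for d in range(2):
                vals = [seq[t][d] for seq in best]
                mu = sum(vals) / elites
                var = sum((v - mu) ** 2 for v in vals) / elites
                mean[t][d] = mu
                std[t][d] = max(var ** 0.5, 1e-3)
    return mean, rollout_cost(state, mean, goal)

if __name__ == "__main__":
    plan, cost = cem_plan((0.0, 0.0), goal=(5.0, 3.0))
    # In model-predictive control, only plan[0] is executed before replanning.
    print([round(x, 2) for x in plan[0]], round(cost, 3))
```

Nothing in the planner is task-specific: swap in a different model and goal and the same loop applies, which is why a general world model can handle manipulation tasks it was never explicitly trained on.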

The efficiency gains are getting crazy. Lucid v1 compresses each video frame down to just 15 tokens instead of 500+, more than a 30x reduction in tokens per frame. The result is real-time AI-generated worlds running at 25 FPS on a consumer gaming GPU, no datacenter required.
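Taking the per-frame figures above at face value (and using 500 as the lower bound of "500+"), the token budget a frame-by-frame world model must generate works out as follows. This is plain arithmetic on the stated numbers with a hypothetical helper, not a description of how Lucid’s encoder works.

```python
def tokens_per_second(tokens_per_frame: int, fps: int) -> int:
    """Hypothetical helper: tokens a frame-by-frame world model must produce each second."""
    return tokens_per_frame * fps

baseline = tokens_per_second(500, 25)  # ~500 tokens per frame
compact = tokens_per_second(15, 25)    # 15 tokens per frame, as reported for Lucid v1
print(baseline, compact, round(baseline / compact, 1))  # → 12500 375 33.3
```

At 25 FPS each frame has a 40 ms budget, so cutting the per-frame token count by roughly 33x is what makes that budget reachable on a single consumer GPU.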

Other developments include Wayve’s GAIA-2 for autonomous driving and NVIDIA’s Cosmos, which has been downloaded over 2M times since January 2025 and deployed by Uber for AV data curation, Amazon for manufacturing robotics, and Boston Dynamics for simulation.

The technology has attracted significant capital. World Labs raised $230M at a $1B+ valuation, while Decart, which has demonstrated real-time world generation with models like Oasis, reached a $3.1B valuation.

xAI, Elon Musk’s AI venture, is also entering the space, hiring NVIDIA experts like Zeeshan Patel and Ethan He to accelerate development of advanced world models capable of designing and navigating physical spaces.

This aligns with Musk’s announcement that xAI’s game studio plans to release a “great AI-generated game” before the end of 2026, potentially leveraging these models for dynamic, AI-driven video game generation.

Local LLMs moving from experiment to production

World models promise the future, but they require massive compute and cloud infrastructure. Meanwhile, a quieter revolution is happening on-device: local LLMs are already solving practical problems for developers today, no datacenter required.

Take Echoes of Ir, which showcased companions powered by local LLMs, complete with memory-like behavior and betrayal mechanics, running entirely on-device without any cloud latency.

Or Verbal Verdict, which shipped on Steam with a local LLM stack driving its interrogation-based gameplay. It’s one of the first commercial titles to prove that real-time, on-device dialogue works in production. Then there’s Adam Lyttle, who built a music learning app using Apple Intelligence that handles real-time note monitoring, adjusts difficulty on the fly, and announces progress, all running locally on the device.

Behind the scenes, developers are increasingly turning to tools like Ollama and LocalAI to integrate models such as Gemma and Llama into Unity and Godot. The result: NPC systems that keep working with no internet connection. At GDC 2025, sessions on co-creative storytelling highlighted these architectures. The hardware barrier is falling fast: 8B models now run smoothly on consumer GPUs with 12-24GB of VRAM.
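For a sense of what the Ollama side of such an integration looks like, here is a minimal sketch that sends an NPC persona, prior dialogue turns, and a new player line to a locally running Ollama server through its `/api/chat` endpoint on the default port 11434. The model name `llama3.2`, the persona text, and the helper function names are illustrative assumptions; in Unity or Godot the same JSON request would be sent from C# or GDScript.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local endpoint

def build_npc_request(persona: str, history: list, player_line: str,
                      model: str = "llama3.2") -> dict:
    """Hypothetical helper: a non-streaming /api/chat payload with the persona as
    the system message, prior turns as memory, and the new player line last."""
    messages = [{"role": "system", "content": persona}]
    messages += history
    messages.append({"role": "user", "content": player_line})
    return {"model": model, "messages": messages, "stream": False}

def parse_npc_reply(raw: bytes) -> str:
    """Hypothetical helper: extract the assistant text from a non-streaming reply."""
    return json.loads(raw)["message"]["content"]

def ask_npc(payload: dict, timeout: float = 10.0) -> str:
    """POST to the local server; requires `ollama serve` running and the model pulled."""
    req = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return parse_npc_reply(resp.read())

if __name__ == "__main__":
    payload = build_npc_request(
        persona="You are Mira, a wary blacksmith. Answer in one or two sentences.",
        history=[],
        player_line="Can you repair my sword?",
    )
    try:
        print(ask_npc(payload))
    except OSError as err:  # server not running, connection refused, timeout
        print("Local model unavailable:", err)
```

Appending each exchange to `history` is the simplest way to give an NPC memory across turns; every request stays on the player’s machine.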

The next frontier is voice. NeuTTS Air, released in October 2025, brings production-ready text-to-speech to the edge with its 748-million-parameter model running in just 400-600MB of RAM.

Built on a Qwen 0.5B backbone and compressed to around 500MB in quantized format, it achieves real-time synthesis on mid-range CPUs. No GPU required. The model clones voices from 3-15 seconds of reference audio with 200ms latency and 85-95% speaker similarity, making dynamic voiceover for procedural dialogue or adaptive tutorials suddenly viable offline. Open-sourced under Apache 2.0, it’s already being deployed on devices ranging from laptops to Raspberry Pi boards.
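The memory figures in this section follow from a rule of thumb: weight storage ≈ parameter count × bits per weight ÷ 8. The sketch applies it to an 8B model and to NeuTTS Air’s 748M parameters. It counts weights only (activations, KV cache, and runtime overhead come on top, and real quantized files mix precisions), so treat the results as rough lower bounds; the helper name is hypothetical.

```python
def weight_gb(params: float, bits_per_weight: float) -> float:
    """Hypothetical helper: approximate weight storage in GB (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9

for bits in (16, 8, 4):
    print(f"8B model @ {bits}-bit: {weight_gb(8e9, bits):.1f} GB")

# NeuTTS Air: 748M parameters in a ~500 MB quantized file implies an
# average of roughly 500e6 * 8 / 748e6 bits per weight.
print(f"{500e6 * 8 / 748e6:.1f} bits/weight")
```

By this estimate an 8B model needs about 16 GB for weights at 16-bit precision but about 4 GB at 4-bit, which is why quantized 8B models fit within the 12-24GB of VRAM cited above, and NeuTTS Air’s ~500MB file averages a bit over 5 bits per weight.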

I think this shift to edge AI addresses three problems that cloud-based solutions simply can’t solve. First, there’s no network round-trip, so real-time interactions like NPC dialogue or adaptive difficulty never wait on a server. Second, there are no recurring API costs that balloon with your player count. And third, player data stays on the device, giving you privacy by design.
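The cost argument is easy to make concrete: a cloud LLM bill scales linearly with players × interactions × tokens, while an on-device model adds no per-request inference bill for the studio. Every input below (exchanges per player, tokens per exchange, and the price per million tokens) is a hypothetical placeholder, not a quote from any provider.

```python
def monthly_api_cost(players: int, exchanges_per_player: int,
                     tokens_per_exchange: int, usd_per_million_tokens: float) -> float:
    """Hypothetical helper: monthly cloud inference bill in USD from placeholder inputs."""
    tokens = players * exchanges_per_player * tokens_per_exchange
    return tokens / 1_000_000 * usd_per_million_tokens

for players in (10_000, 100_000, 1_000_000):
    cost = monthly_api_cost(players, exchanges_per_player=300,
                            tokens_per_exchange=600, usd_per_million_tokens=0.50)
    print(f"{players:>9,} players: ${cost:,.0f}/month")
```

A 100x increase in players means a 100x bill. With a local model that line item stays flat, though the studio trades it for tighter limits on model size and player hardware.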

As a result, novel interaction patterns are emerging: vibe-coded games where AI responds instantly to player whims, and reinforcement learning-enhanced NPCs that evolve without network delays. That kind of thing wasn’t really possible before.

From runtime to production: AI transforming asset creation

Tools like Tencent’s Hunyuan-Game are transforming how games are built. Launched in June 2025, it renders sketches in roughly one second and produces three-view diagrams in minutes instead of 12+ hours. Production speed is reportedly up around 300%.

Trained on millions of pieces of game-industry data, it handles mobile environments, characters, and UI with impressive precision. Real-time sketching instantly visualizes ideas. Automated character views give you 360° previews ready for rigging. The workflow goes straight from inspiration to design. By September 2025, version 2.0 added image-to-video generation, custom LoRA training for style consistency, and one-click refinement tools.
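Custom LoRA training is practical for per-project style consistency because it trains only a small low-rank update instead of the full weights: a frozen d × k matrix W is adapted as W + BA, where B is d × r, A is r × k, and the rank r is much smaller than d and k. The sketch counts trainable parameters for one layer; the 4096 × 4096 shape, rank 8, and helper name are illustrative assumptions, not Hunyuan-Game’s actual configuration.

```python
def lora_params(d: int, k: int, r: int) -> int:
    """Hypothetical helper: trainable parameters of a rank-r LoRA update B @ A for a d x k weight."""
    return r * (d + k)  # B is d x r, A is r x k

full = 4096 * 4096
lora = lora_params(4096, 4096, r=8)
print(full, lora, full // lora)  # → 16777216 65536 256
```

Because only A and B are trained, a style adapter is small enough to train and swap per project while the shared base model stays frozen.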

Hunyuan3D Studio goes further: text-to-3D generation, one-minute UV unwrapping, PBR editing, and auto-rigging. What used to take days now takes minutes.

The open-sourced Hunyuan-GameCraft is where it gets really interesting. It creates interactive video from single scenes with user-controlled camera movements and environmental effects like rain or clouds, all running on an RTX 4090.

With photorealistic textures and direct exports to Unity, Unreal, and Blender, high-quality asset creation is actually accessible now.

Vibe coding proves itself in unexpected places

The NHS Clinical Game Jam in September 2025 showed just how transformative these tools can be beyond traditional game studios. Healthcare professionals with zero game development experience used Rosebud’s AI tools to prototype playable games in a single weekend, including a winning mental health garden game that teaches emotional regulation through play.

These clinicians went from concept to prototype without touching traditional code. They focused entirely on design and therapeutic purpose instead of wrestling with toolchains.

Gambo.ai takes a similar approach. Users can build complete, playable games from a single text prompt in minutes. It automatically generates everything: characters, maps, animations, music, sound effects, even built-in ads for day one monetization.

This no-code workflow eliminates the usual barriers like scripting or manual asset organization. Creators focus on ideas rather than technical execution. Some users have recreated classics like Doodle Jump or built complex demos inspired by games like Silksong in hours or days, with AI handling everything from 2D/3D visuals to interactive elements.

I think this is a glimpse of where creation is headed. Not just faster workflows for existing developers, but genuinely new creators entering the space who would’ve been locked out before. The barrier to entry is collapsing.

Conclusion

AI in game development is past the “wait and see” phase. Studios experimenting now are finding real uses, and those sitting on the sidelines are starting to fall behind. Mobile leads adoption because mobile players care less about AI usage and optimization matters more there. PC and console developers have to be more careful, since their audiences are far more sensitive to AI. Some of the hype is overblown, but the technology solves real problems, especially around exploding budgets and production risk.

Small teams can suddenly punch above their weight. The 8,000+ games on Steam already using AI show it’s working when it’s used to help creators, not replace them. Meanwhile, bigger publishers often struggle with internal politics around adoption. That might actually give indies an edge.

Like every tech wave before, AI will unlock entirely new types of games and experiences we haven’t imagined yet. World models and related tech will fundamentally change how we think about building and distributing games altogether.

Every studio should be running small experiments now. Prototype something. Build internal expertise. Figure out what works for your audience. The biggest opportunities are the ones we can’t quite see yet. You want to be ready when they come into focus.

Disclaimers:

This is not an offering. This is not financial advice. Always do your own research. This is not a recommendation to invest in any asset or security.

Past performance is not a guarantee of future performance. Investing in digital assets is risky and you have the potential to lose all of your investment.

Our discussion may include predictions, estimates or other information that might be considered forward-looking. While these forward-looking statements represent our current judgment on what the future holds, they are subject to risks and uncertainties that could cause actual results to differ materially. You are cautioned not to place undue reliance on these forward-looking statements, which reflect our opinions only as of the date of this presentation. Please keep in mind that we are not obligating ourselves to revise or publicly release the results of any revision to these forward-looking statements in light of new information or future events.

October 15, 2025
