How Agents Are Changing the Rules of Business

The first wave of AI was about creation: writing blog posts, generating images, drafting emails. Now we're witnessing something fundamentally different: AI that doesn't just create, it acts. These aren't content generators anymore; they're autonomous agents completing real tasks and achieving actual objectives.

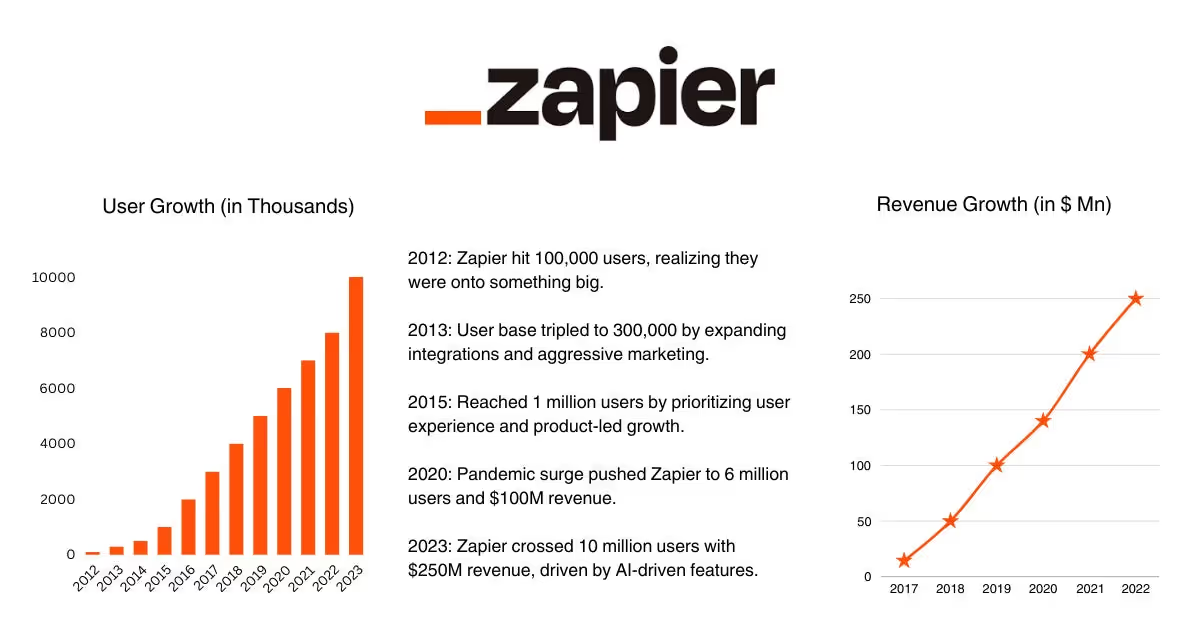

AI tasks on Zapier's platform alone grew 760% in two years, the fastest growth the company has ever recorded, with over 200 million AI tasks now completed each month across 8,000+ connected apps. But this is more than impressive growth: it signals a fundamental shift in how work gets done.

Despite these powerful capabilities, most firms still run AI in silos. Marketing teams prompt Claude, sales teams analyze calls with Gong, and support uses ChatGPT. Each tool provides unique value, but this dispersed approach leaves workflows disconnected and needs unmet. The real breakthrough happens when organizations stop treating AI as scattered tools and start building integrated agent ecosystems.

When DoorDash deployed its first AI agents, it scaled from zero to 35,000+ automated calls in six weeks with 94% accuracy. According to an IBM survey, 99% of enterprise developers building AI applications are exploring or developing AI agents. What's the difference? Agents are not simple content creators; they are autonomous task executors. Earlier AI tools required constant human oversight, while modern agents can work largely on their own.

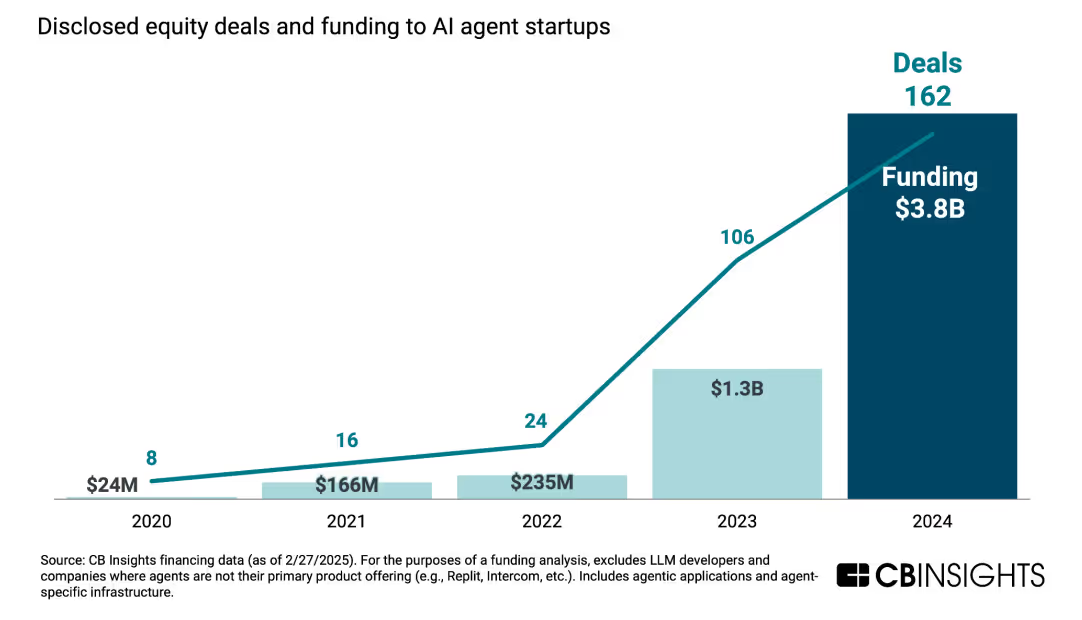

The momentum for this transition is evident. AI agent companies raised $3.8 billion in 2024, nearly double the figure in 2023, as every major technology company began creating agent platforms and tooling. Workforce composition will shift toward hybrid human-AI teams, and operational efficiency will skyrocket as mundane jobs are entirely automated.

Here's why it's happening now, how the best companies are capturing value, and what it implies for the future of work.

The Technical Leap

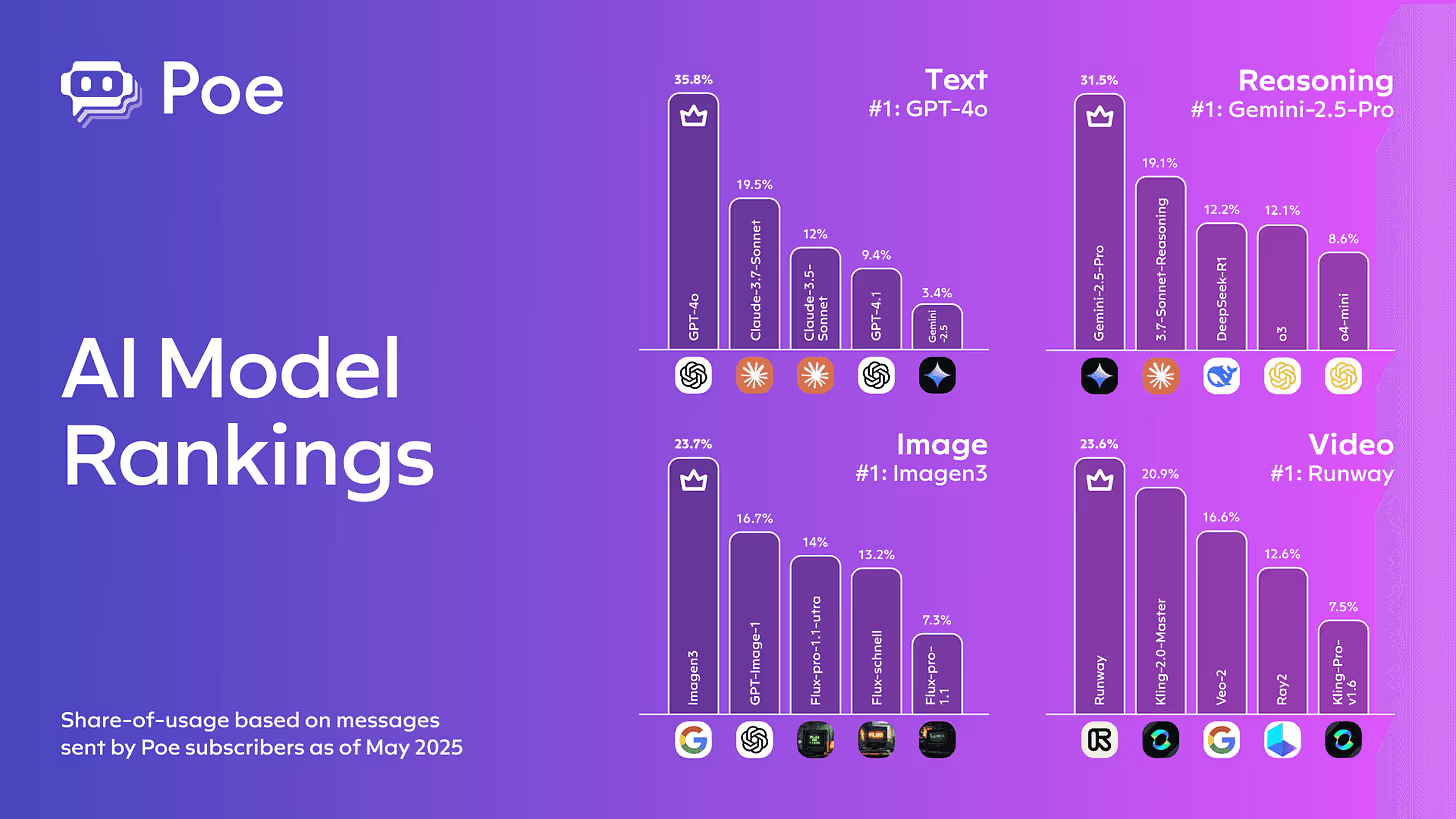

The technical leap stems from advances in reasoning models: AI that can think through problems step by step. Poe's data shows the shift, with reasoning-model usage jumping from 2% to 10% of all messages in early 2025.

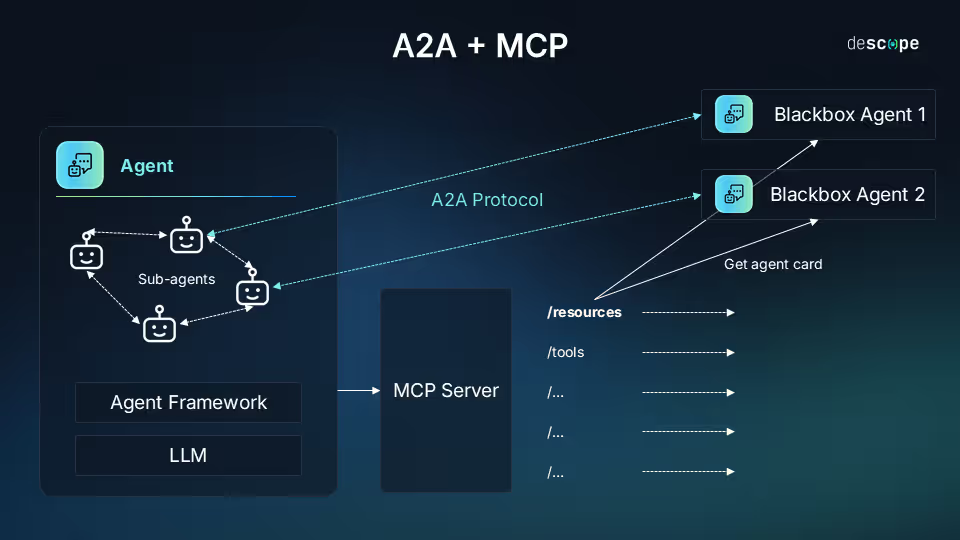

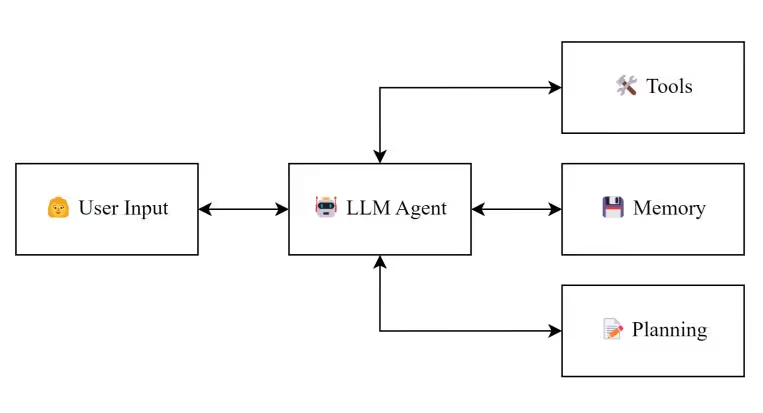

Reasoning models can plan, execute, and adjust in real time, a fundamental shift from previous AI that relied on pattern matching. This capability leap required new infrastructure.

Three foundational protocols emerged: Anthropic's Model Context Protocol (MCP) connects agents to tools and data sources, Google's Agent2Agent (A2A) protocol enables agent-to-agent collaboration, and AG-UI handles interactions between agents and user interfaces. Rather than competing, these protocols complement each other, and Microsoft's Azure AI Foundry Agent Service now unifies these standards with built-in observability.
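MCP is built on JSON-RPC 2.0: a client discovers a server's tools with a `tools/list` request and invokes one with `tools/call`. The sketch below is a simplified, in-process illustration of those message shapes, not the official MCP SDK; it omits the initialization handshake, transports, and capability negotiation, and the `get_weather` tool is hypothetical.

```python
import json
from typing import Any, Dict

def get_weather(location: str) -> str:
    # Hypothetical tool; a real server would call a weather service here.
    return f"Sunny in {location}"

# Tool registry: the metadata advertised via tools/list plus a local handler.
TOOLS: Dict[str, Dict[str, Any]] = {
    "get_weather": {
        "description": "Get current weather for a location",
        "inputSchema": {
            "type": "object",
            "properties": {"location": {"type": "string"}},
            "required": ["location"],
        },
        "handler": get_weather,
    }
}

def error(req_id: Any, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})

def handle(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request string and return the response string."""
    req = json.loads(raw)
    method, params, req_id = req["method"], req.get("params", {}), req.get("id")
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return error(req_id, -32602, "Unknown tool")  # JSON-RPC "invalid params"
        text = tool["handler"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return error(req_id, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})

request = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
           "params": {"name": "get_weather", "arguments": {"location": "Berlin"}}}
print(handle(json.dumps(request)))
```

The appeal of this design is that any agent able to speak the same small set of JSON messages can use any tool server, which is what lets MCP act as a common connector layer.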

The Emerging Ecosystem

This technical foundation unlocked an entirely new category of business software. Unlike rigid automation that breaks when interfaces change, AI agents use reasoning and context to navigate PDFs, spreadsheets, and legacy systems without pre-programmed pathways.

The result? Specialized agent platforms emerged across every business function, driving sector value from $5.4 billion to $7.6 billion in just one year. The deeper trend, however, is mass democratization: domain experts can now build complex agents on no-code platforms, with no developers required.


The enterprise numbers prove this isn't just hype. Salesforce reported over 200 Agentforce deals weekly, with thousands in the pipeline. Microsoft's Copilot platform enables low-code agent creation, removing technical barriers that previously limited AI deployment to engineering teams.

The Brains Behind the Operation

But here's what makes this transformation possible: a new generation of LLMs that don't just generate text. They reason, plan, and orchestrate entire workflows. These "agentic" models work like a conductor leading an orchestra, with the LLM as the brain coordinating a whole toolkit of specialized components.
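The "conductor" role can be sketched as a plan-execute-adjust loop. In the minimal Python example below, a hard-coded planner stands in for the reasoning model, and the two tools (`catalog_price`, `web_search_price`) and their data are hypothetical. What it shows is the control flow: build a plan, run each step, and re-plan a failed step with a fallback tool instead of abandoning the whole task.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical tools standing in for real APIs; items and prices are made up.
def catalog_price(item: str) -> float:
    catalog = {"widget": 4.0, "gadget": 10.0}
    return catalog[item]  # raises KeyError for items not in the catalog

def web_search_price(item: str) -> float:
    search_results = {"gizmo": 7.5}
    return search_results[item]  # raises KeyError when the "search" finds nothing

TOOLS: Dict[str, Callable[[str], float]] = {
    "catalog_price": catalog_price,
    "web_search_price": web_search_price,
}

# Which tool to try next when a step fails (None means give up on that step).
FALLBACK: Dict[str, Optional[str]] = {
    "catalog_price": "web_search_price",
    "web_search_price": None,
}

def plan(items: List[str]) -> List[Tuple[str, str]]:
    """Stand-in for a reasoning model: one (tool, argument) step per item."""
    return [("catalog_price", item) for item in items]

def run_agent(items: List[str]) -> Tuple[float, List[str]]:
    total, log = 0.0, []
    queue = plan(items)                      # plan
    while queue:
        tool, arg = queue.pop(0)
        try:
            total += TOOLS[tool](arg)        # execute
            log.append(f"{tool}({arg}) ok")
        except KeyError:
            alternative = FALLBACK[tool]     # adjust: re-plan just this step
            if alternative is not None:
                queue.insert(0, (alternative, arg))
                log.append(f"{tool}({arg}) failed, retrying with {alternative}")
            else:
                log.append(f"{tool}({arg}) failed, no fallback left")
    return total, log

total, log = run_agent(["widget", "gizmo", "doohickey"])
print(total)  # 11.5
for line in log:
    print(line)
```

Here `gizmo` is missing from the catalog, so the loop retries it with the search tool; `doohickey` fails both tools and is skipped rather than crashing the run. A real agent would ask the model to re-plan instead of consulting a fixed fallback table.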

The leaders in this space each bring something unique to the table:

Anthropic's Claude 4 (May 2025) leads in coding, with its Opus 4 variant scoring 72.5% on SWE-bench. It can sustain multi-hour sessions using external tools via Claude Code and the Model Context Protocol (MCP). The Sonnet 4 variant, meanwhile, offers strong reasoning and memory at lower cost. Commercially, Anthropic had already reached $2B in annualized revenue by Q1 2025.

OpenAI has built out its agent stack with an Agents SDK and the Responses API, alongside Operator, a GPT-4o-based agent that can navigate websites and complete transactions. Google's Gemini powers Agentspace, an enterprise platform that supports the Agent2Agent (A2A) protocol for multi-agent coordination across tools and workflows.

Manus AI, launched in early 2025, reported 86.5% task success on Level 1 of the GAIA benchmark, surpassing many peers. It specializes in autonomous multi-agent execution for domains like travel planning, web design, and finance.

Mistral AI joined this elite group in May 2025 with its Agents API, taking a different approach focused on enterprise orchestration. Instead of emphasizing individual agent capabilities, Mistral built a framework for multi-agent coordination. The system handles handoffs between agents: a finance agent, for example, can delegate to a web search agent or a calculator agent mid-conversation, creating collaborative workflows that tackle complex problems piece by piece.

The technical foundation is impressive: built-in connectors for code execution, web search, image generation, and MCP tools, plus persistent memory across conversations. On the SimpleQA benchmark, Mistral Large with web search jumps from 23% to 75% accuracy, strong evidence that connected agents dramatically outperform isolated ones.
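The handoff pattern described above can be sketched independently of any vendor API. In the minimal Python example below, which does not use Mistral's Agents API, each "agent" is a plain function, and a hypothetical `Router` passes sub-tasks between them and records the chain of handoffs; the canned savings rate stands in for a real web search.

```python
from typing import Callable, Dict, List

# An "agent" here is just a function that takes a task and the router,
# so it can hand sub-tasks to other agents.
AgentFn = Callable[[str, "Router"], str]

class Router:
    """Hypothetical coordinator: dispatches tasks by agent name and records handoffs."""

    def __init__(self) -> None:
        self.agents: Dict[str, AgentFn] = {}
        self.trace: List[str] = []

    def register(self, name: str, fn: AgentFn) -> None:
        self.agents[name] = fn

    def handoff(self, to: str, task: str) -> str:
        self.trace.append(to)
        return self.agents[to](task, self)

def search(task: str, router: Router) -> str:
    # Stand-in for a web search agent; returns a canned, made-up answer.
    canned = {"savings rate": "0.05"}
    return canned[task]

def calculator(task: str, router: Router) -> str:
    # Accepts tasks of the form "multiply <a> <b>".
    op, a, b = task.split()
    if op != "multiply":
        raise ValueError(f"unsupported operation: {op}")
    return str(round(float(a) * float(b), 2))

def finance(task: str, router: Router) -> str:
    # Accepts tasks like "project 1000": one year of growth at the looked-up rate.
    _, principal = task.split()
    rate = float(router.handoff("search", "savings rate"))                  # delegate lookup
    return router.handoff("calculator", f"multiply {principal} {1 + rate}")  # delegate math

router = Router()
for name, fn in [("finance", finance), ("search", search), ("calculator", calculator)]:
    router.register(name, fn)

print(router.handoff("finance", "project 1000"))
print(router.trace)
```

The finance agent never does arithmetic or lookups itself; it only decides which specialist to call, which is the division of labor the handoff model is meant to enable.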

We're witnessing the emergence of an "agent economy" where AI systems don't just respond to prompts but independently execute complex, multi-step tasks that deliver real-world results.

Software Development

Nowhere is this agent revolution more visible than in software development. The coding industry is experiencing its most significant disruption since the Internet, and the numbers are staggering.

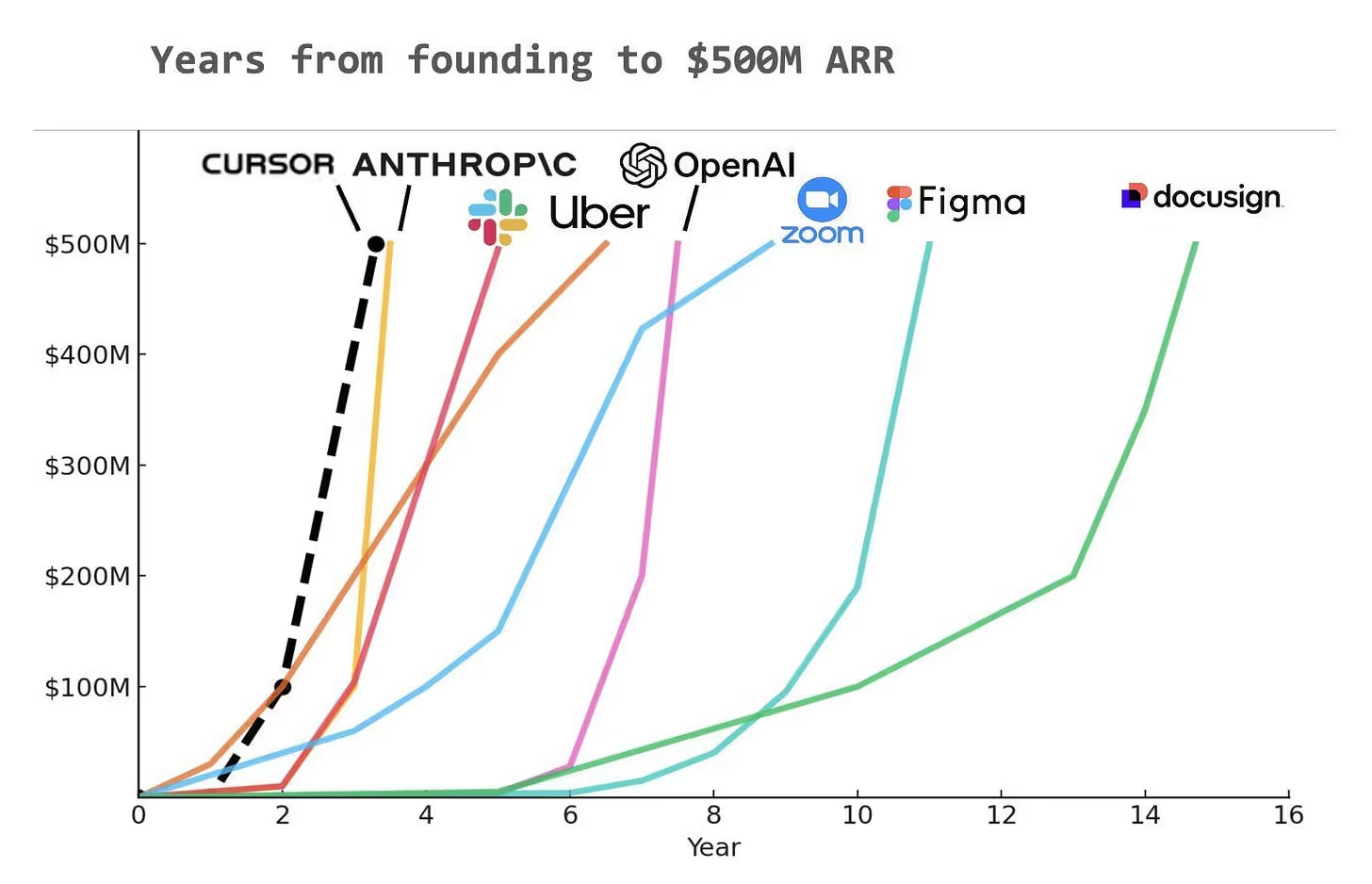

Take Anysphere, creator of Cursor, an AI-powered fork of VS Code. The company raised $900M at a $9B valuation, up from $2.5B just months earlier. Cursor generates nearly a billion lines of code daily, and Anysphere ranks among the fastest-growing software startups, with $500M ARR and over a million daily users.

Tech giants are racing to integrate AI agents into entire product suites, from Microsoft 365 Copilot to Salesforce Einstein and Adobe's generative features. Users now expect applications to have intelligent assistants, making AI capabilities a competitive necessity rather than a nice-to-have.

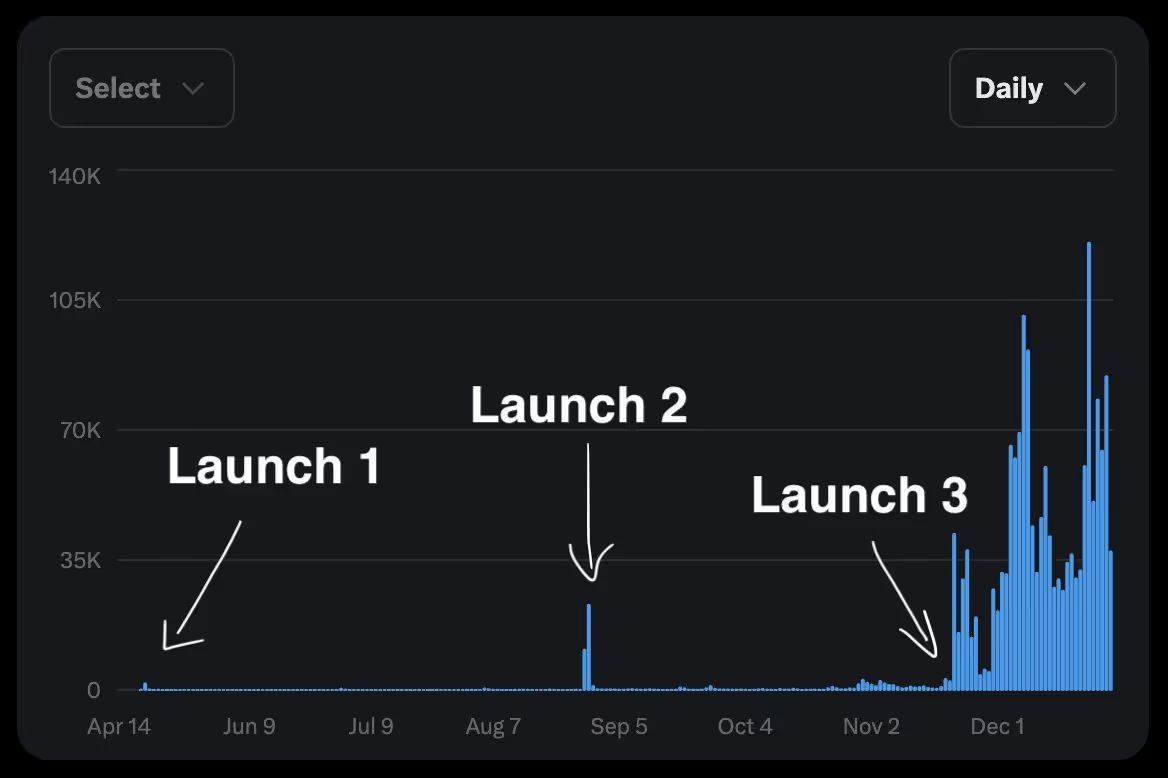

This reflects broader momentum: AI coding tool investment doubled from $420M (2023) to $780M (2024), and speed records continue falling. Swedish startup Lovable hit $17M ARR in just three months with 15 employees. Behind this overnight success lies 18 months of iteration.

Founder Anton Osika's GPT Engineer gained 50,000 GitHub stars but struggled to monetize. After multiple pivots, the team rebranded as Lovable in November 2024 with a breakthrough: keeping the AI from getting "stuck" on large codebases.

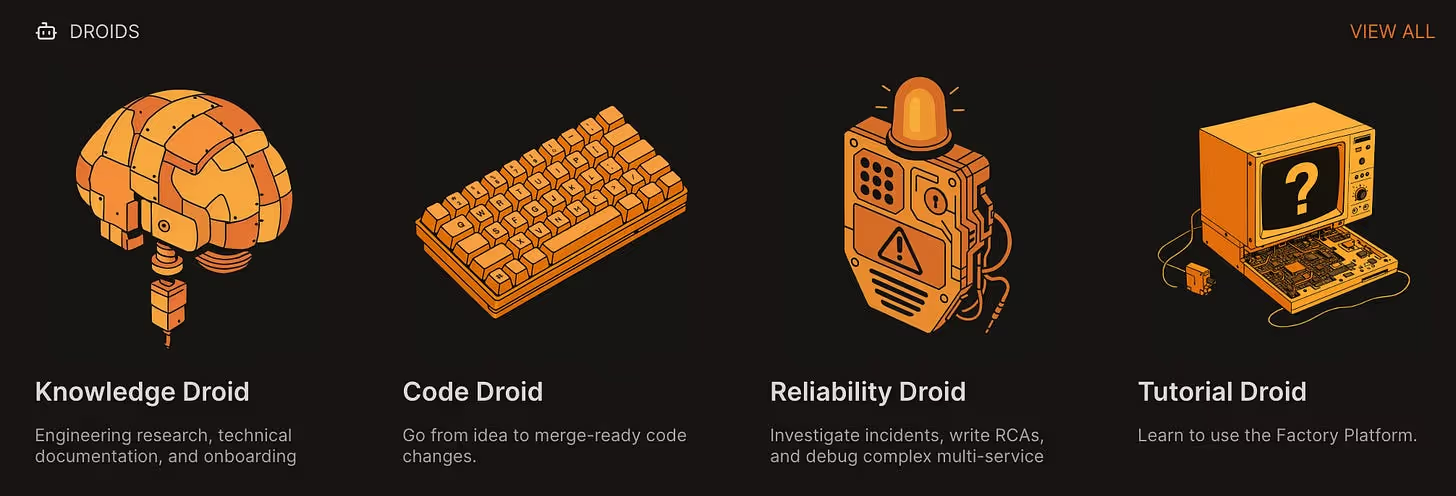

The enterprise angle is equally compelling. Factory.AI emerged from stealth after quietly serving enterprise clients for two years. Their specialized AI "droids" handle complete development workflows, letting human teams focus on architecture and product strategy instead of grinding through tickets.

CEO Matan Grinberg envisions a near future where AI agents meet developers everywhere. Imagine tagging droids in Slack to turn brainstorming sessions into working code or watching backlogged tickets automatically become production-ready pull requests. Development becomes less about coding and more about understanding, planning, and testing.

The competition is getting cutthroat. Anthropic recently cut Windsurf's Claude access amid rumors that OpenAI wants to acquire the coding assistant for $3B. "It would be odd for us to be selling Claude to OpenAI," Anthropic's Chief Science Officer noted dryly, highlighting just how strategic these tools have become.

The stakes justify the aggression. Anthropic CEO Dario Amodei has predicted that AI will write 90% of code within three to six months and essentially all code within a year. He envisions individuals running billion-dollar businesses solo by 2026, using AI for work that once required massive teams. His warning that AI could eliminate half of entry-level white-collar jobs and push U.S. unemployment as high as 10 to 20% within the next one to five years might sound extreme, but the current pace suggests his timeline isn't far-fetched.

The Infrastructure Powering It All

While coding assistants grab headlines, another battle happens in the frameworks and toolkits layer.

LangChain dominates this space with its modular approach to building AI applications. As of February 2025, its developer platform, LangSmith, has surpassed 250,000 user sign-ups, processed over 1 billion trace logs, and supports around 25,000 monthly active teams. The company was valued at $200 million following Sequoia’s $25 million Series A.

Salesforce's Agentforce shows the enterprise appetite is massive. Salesforce has closed over 1,000 paid Agentforce deals, and the platform automates 84% of support tickets and delivers up to 40% cost reductions. With 380,000 internal inquiries resolved, it demonstrates the scalability enterprises demand.

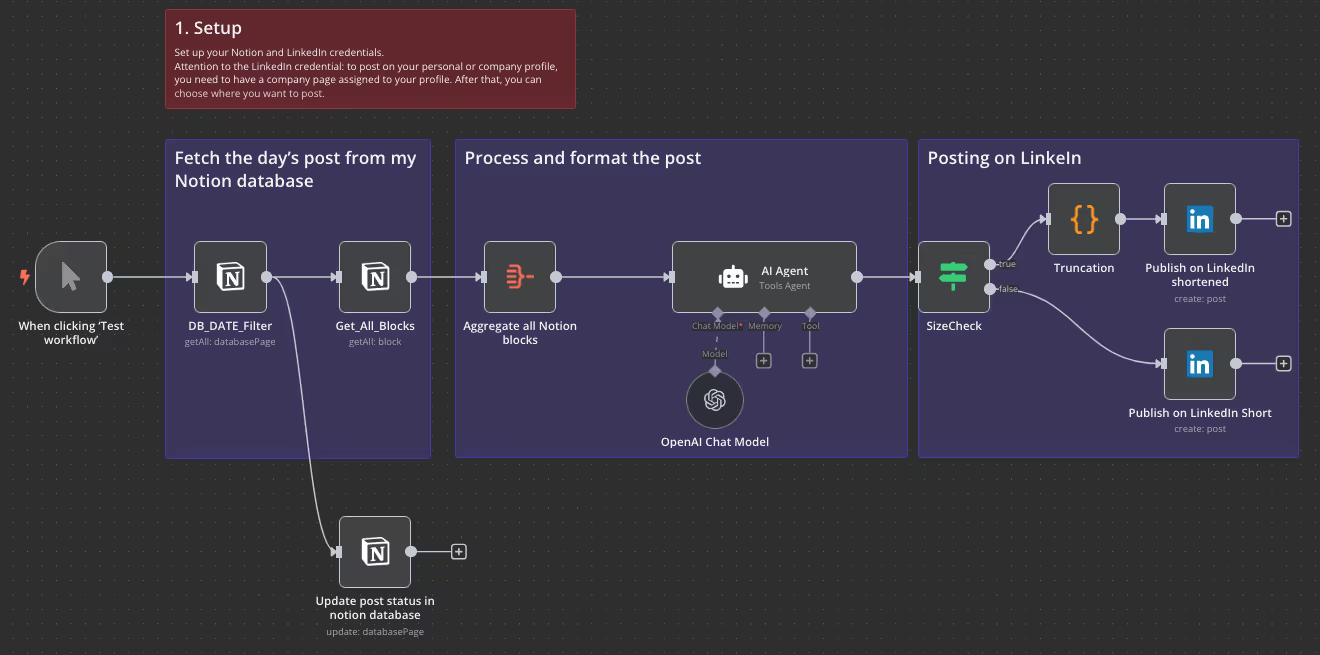

n8n exemplifies the no-code revolution in AI workflows. After pivoting to AI-powered automation in 2022, the Berlin-based platform saw 5x revenue growth, with revenue doubling in the last two months alone. Its recent $60M raise at a $270M valuation reflects investor confidence in democratizing AI development. "It's a prompt to build workflow," explains founder Jan Oberhauser.

Users can write "get information from X and send it to Y" in natural language instead of coding 50 lines for email integration. With 75% of their 3,000+ enterprise customers now using AI tools, n8n proves the infrastructure layer is where the fundamental transformation happens.

E2B tackles the elephant in the room: safety. Their $11.5M seed round funds open-source sandboxes that let AI-generated code run securely. Processing millions of instances monthly, they're becoming the essential safety layer that makes everything else possible.

When AI Agents Come to Play

Here's where things get interesting: gaming has become the ultimate proving ground for AI agents. Virtual worlds provide the perfect sandbox for observing how agents behave, cooperate, and evolve when given complete freedom to act. The gaming industry is witnessing a fundamental shift as AI agents evolve from simple NPCs into sophisticated autonomous entities.

Altera made waves by securing $11.1M after demonstrating agents forming complex societies in Minecraft, complete with politics, trade relationships, and cultural evolution. Now they've revealed their flagship product, Fairies, a general-purpose agent capable of executing over 1,000 actions across popular applications, from code generation to task parallelization.

Meanwhile, Nunu.ai has raised $6M to support their vision of unembodied agents that seamlessly adapt across different gaming environments.

Nunu's practical impact is already evident: Roboto Games deployed Nunu to automate QA testing for Stormforge, cutting costs by 50% and reclaiming 160 hours a month through 400+ automated tests generated from natural language commands. The integration took five hours and now delivers daily testing coverage spanning performance, combat mechanics, and UI validation, complete with video playback and instant Slack notifications.

DeepMind's SIMA agent demonstrates vision-based gameplay in titles like No Man's Sky and Valheim, following natural language instructions by emulating keyboard and mouse input.

Similarly, Stanford's Generative Agents showcased 25 autonomous NPCs orchestrating coordinated events while pursuing individual goals within a sandbox environment.

These developments signal gaming's evolution from static, predetermined systems to dynamic, intelligent simulations that fundamentally reshape both game design and AI-human interaction.

The Sound of Intelligence

Voice is becoming the dominant way we interact with AI. After all, talking feels more natural than typing, especially when you're asking an AI to book your flight, debug your code, or manage your calendar.

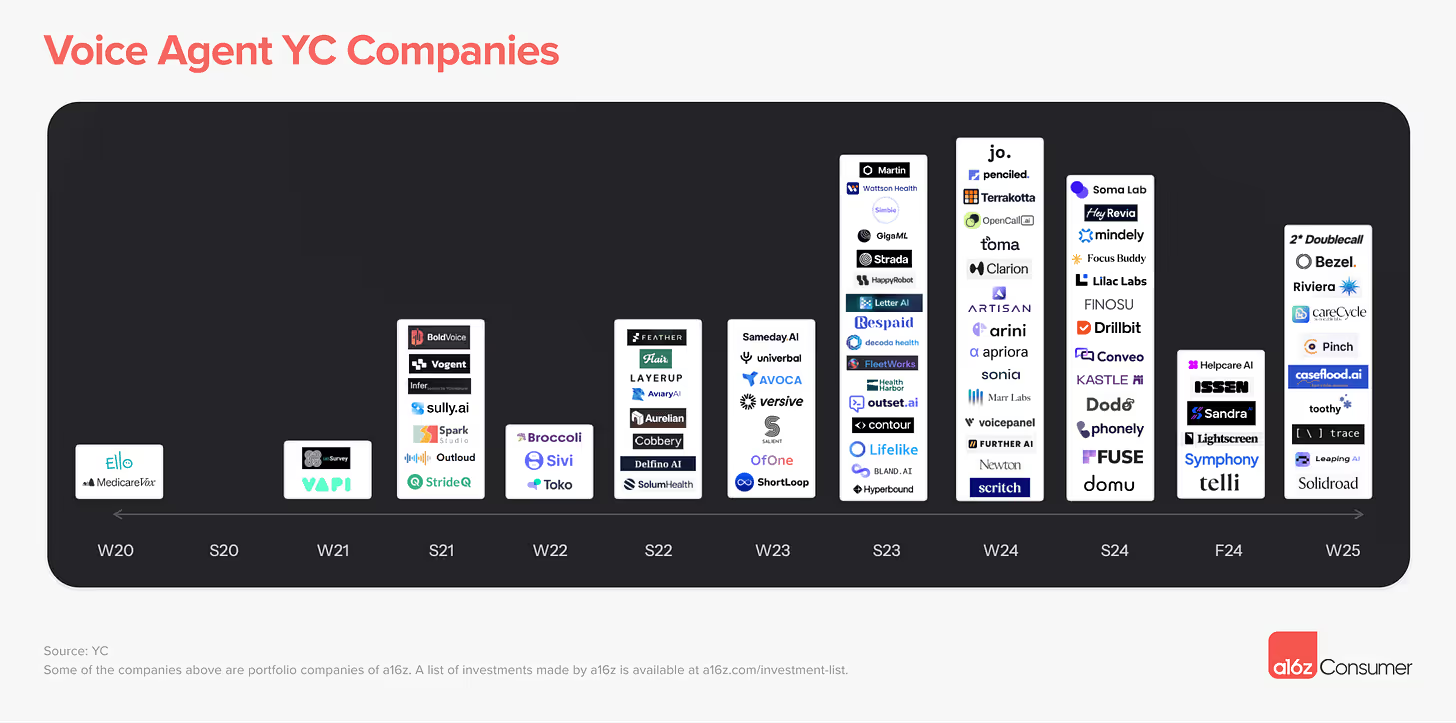

Several companies are driving innovation in this rapidly evolving space. OpenAI's Realtime API, launched in 2024, catalyzed speech-to-speech application development across multiple use cases, and voice AI companies now make up a larger share of Y Combinator's recent cohorts than ever before.

Deepgram’s new enterprise-grade speech-to-speech architecture marks a key milestone in voice AI, enabling real-time, emotionally rich interactions without converting speech to text. This end-to-end design reduces latency and preserves nuance, crucial for enterprise use. With built-in debugging tools and efficient training via transfer learning, the platform lowers infrastructure costs at scale. As 84% of organizations increase budgets for voice agents, Deepgram stands out as a foundational voice AI provider.

ElevenLabs, on the other hand, continues to lead in text-to-speech synthesis. Its latest v3 model introduces rich emotional control via inline audio tags like [excited], [whispers], and [sighs], making it ideal for media production, audiobooks, and character-driven content.

However, v3's latency makes it better suited to asynchronous than real-time use cases.

Platforms like VAPI offer a comprehensive developer stack for real-time voice agents, handling voice recognition, synthesis, and conversation orchestration. For instance, Fleetworks leveraged VAPI to enable over 10,000 AI-driven calls per day, streamlining operations and slashing engineering overhead in the transportation sector.

Together, these advancements mark a shift toward fully integrated, immersive, and emotionally intelligent voice agents, enabling companies to deploy naturalistic voice experiences without building complex infrastructure from scratch.

From Voice to Action: Agents Take the Wheel

But voice is just the beginning. The real magic happens when AI agents can act on what you tell them, not just in games or through APIs, but directly in the web browser where most of our digital work happens.

Google's Project Mariner operates in the cloud, juggling up to ten tasks simultaneously while learning from user behaviors to automate complex workflows.

OpenAI's Operator goes further, interacting directly with live websites: filling forms, clicking buttons, and executing transactions with near-human precision.

Amazon's Nova Act gives developers comprehensive browser automation tools built on Playwright, and Amazon reports that it outperforms competitors on several benchmarks. Anthropic's Computer Use, powered by Claude, prioritizes safe browsing experiences while maintaining strong reasoning capabilities, a focus on safety that distinguishes it in an increasingly crowded field.

Scouts tackles a different angle: always-on web monitoring. It watches for specific triggers like news updates, price drops, or reservation availability, delivering timely email alerts based on your criteria.

Think of it as having a tireless assistant constantly refreshing dozens of tabs for you.
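One way to picture this kind of monitoring is as a set of user-defined conditions evaluated against each fresh snapshot of a page. The sketch below is an illustration under that assumption, not how Scouts is actually built; the `Trigger` class and the snapshot fields are hypothetical. It fires an alert only when a condition changes from false to true, so a price that stays low doesn't send the same email on every refresh.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Trigger:
    name: str
    condition: Callable[[dict], bool]  # evaluated against each fresh snapshot
    active: bool = False               # was the condition true on the previous check?

def check(triggers: List[Trigger], snapshot: dict) -> List[str]:
    """Return names of triggers whose condition just became true."""
    alerts = []
    for trigger in triggers:
        now = trigger.condition(snapshot)
        if now and not trigger.active:
            alerts.append(trigger.name)  # edge-triggered: alert once per transition
        trigger.active = now
    return alerts

triggers = [
    Trigger("price drop", lambda s: s["price"] < 100),
    Trigger("table available", lambda s: s["tables_free"] > 0),
]
snapshots = [  # made-up page states from three successive checks
    {"price": 120, "tables_free": 0},
    {"price": 95, "tables_free": 0},
    {"price": 90, "tables_free": 2},
]
for snap in snapshots:
    print(check(triggers, snap))
```

On the third check the price is still below 100, but only "table available" fires, because "price drop" already alerted on the previous check.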

Manus represents the current pinnacle, seamlessly combining browsing, reasoning, and tool usage to complete complex, multi-step tasks without human intervention. It's the closest thing we have to a truly autonomous digital assistant.

The Infrastructure Explosion

All these agents need somewhere to store their data, learn from their interactions, and scale their operations. The result? A massive infrastructure buildout that's reshaping the entire data platform landscape.

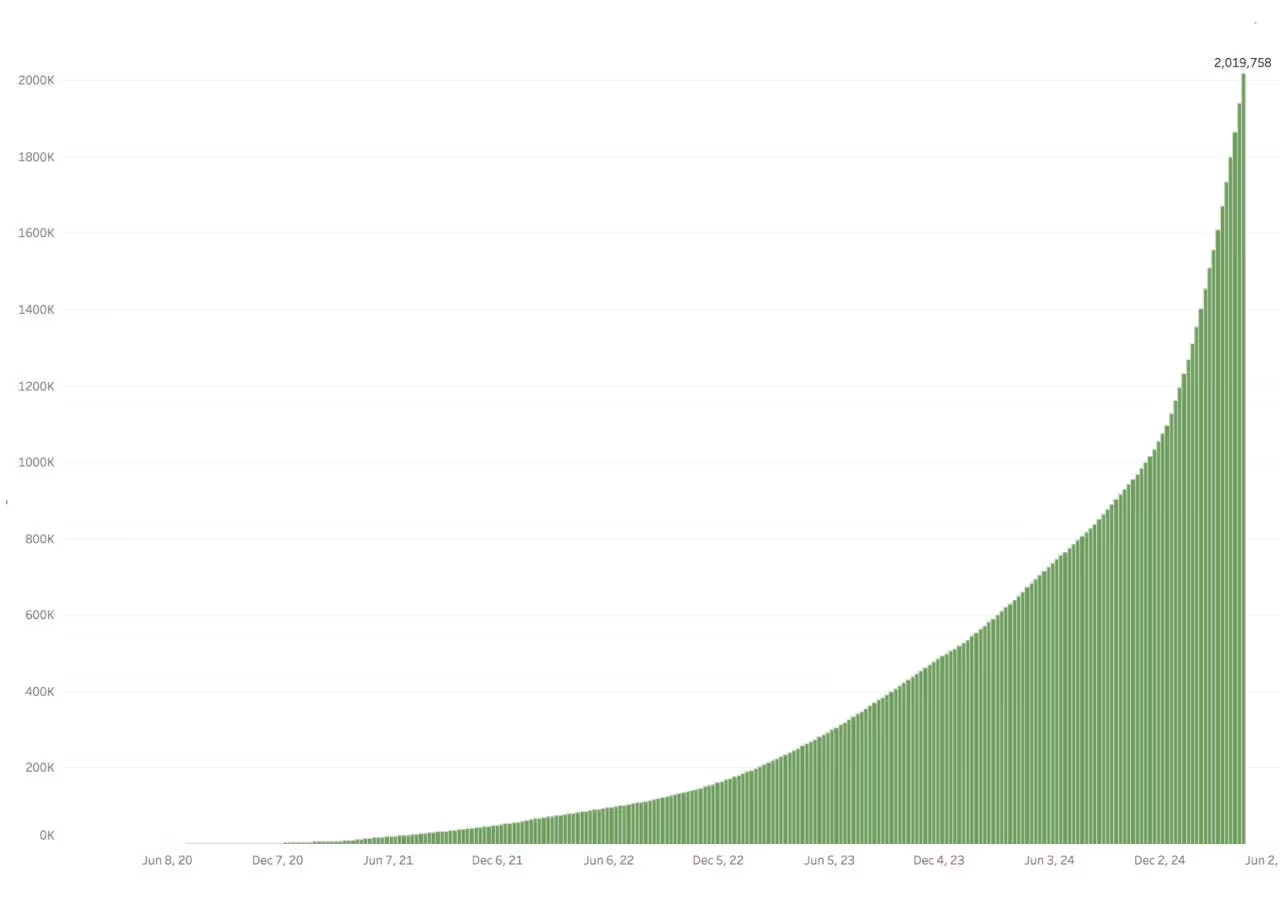

Supabase has grown from a simple Firebase alternative into a backbone of AI development. Native vector search support (via the pgvector Postgres extension) enables semantic search, similarity queries, and embedding-based retrieval, essential components for RAG pipelines and agent backends. Supabase reached 2 million developers by June 2025, doubling in just six months as AI-native developers gravitate toward its open-source stack combining authentication, real-time capabilities, and Postgres extensibility.
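Embedding-based retrieval comes down to ranking stored vectors by their similarity to a query vector. The pure-Python sketch below ranks toy three-dimensional "embeddings" (made-up values) by cosine similarity. In Supabase the equivalent query would run inside Postgres through pgvector, whose `<=>` operator computes cosine distance, and real embeddings come from an embedding model with hundreds or thousands of dimensions.

```python
import math
from typing import Dict, List, Tuple

def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity: dot product divided by the product of vector lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0

def top_k(query: List[float], docs: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Rank stored documents by similarity to the query and keep the best k."""
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in docs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]

# Toy 3-dimensional "embeddings" with made-up values.
docs = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.1],
    "return a damaged item": [0.8, 0.2, 0.1],
}
query = [1.0, 0.0, 0.0]  # pretend embedding of "how do I get my money back?"
for doc_id, score in top_k(query, docs):
    print(f"{doc_id}: {score:.3f}")
```

In a RAG pipeline, the top-ranked passages would then be inserted into the model's prompt as context.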

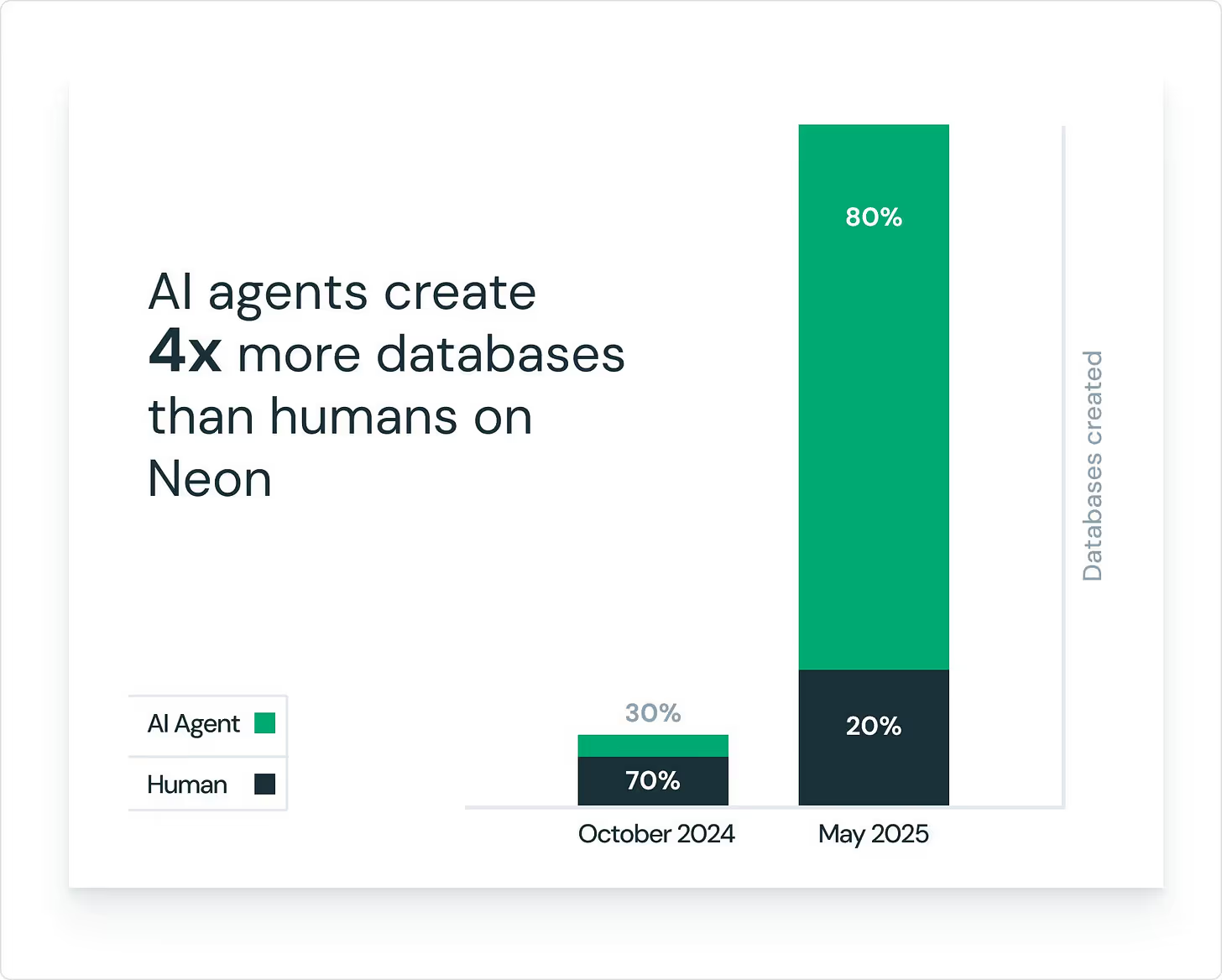

Neon presents an even more dramatic transformation. Following Databricks' ~$1 billion acquisition, announced in May 2025, Neon disclosed that 80% of its serverless Postgres databases are now created by AI agents, up from 30% in October 2024. Agents now provision databases at 4× the human rate. Neon's sub-500ms provisioning, auto-scaling, instant branching, and pay-per-use model perfectly align with machine-driven experimentation and ephemeral database lifecycles.
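The 4× figure follows from the 80% share, assuming every database not created by an agent was created by a human. The same arithmetic applied to the October 2024 share shows agents were then creating fewer than half as many databases as humans:

```latex
\[
\frac{\text{agent-created}}{\text{human-created}}
  = \frac{0.80}{1 - 0.80} = \frac{0.80}{0.20} = 4,
\qquad
\frac{0.30}{1 - 0.30} = \frac{0.30}{0.70} \approx 0.43
\]
```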

Each platform demonstrates how data infrastructure is being reimagined for agentic workflows, where machines, not humans, are the primary consumers of database services.

The Human Element in an Agent World

With all this tech firepower, from Claude 4's coding to Operator's web navigation, it's tempting to focus purely on capabilities. But here's the thing: the real transformation isn't what AI can do, it's how we work with it.

Winners aren't just deploying the latest models. They're rethinking work entirely. Smart companies identify where AI amplifies humans versus where it creates expensive automation nobody wants. They figure out what tasks people want to offload and what work they prefer to keep.

This isn't replacement, it's partnership. The best implementations treat agents as amplifiers, not substitutes. The agent economy is already here, reshaping business. You're either driving this change or watching competitors pull ahead.

Disclaimers:

This is not an offering. This is not financial advice. Always do your own research. This is not a recommendation to invest in any asset or security.

Past performance is not a guarantee of future performance. Investing in digital assets is risky and you have the potential to lose all of your investment.

Our discussion may include predictions, estimates or other information that might be considered forward-looking. While these forward-looking statements represent our current judgment on what the future holds, they are subject to risks and uncertainties that could cause actual results to differ materially. You are cautioned not to place undue reliance on these forward-looking statements, which reflect our opinions only as of the date of this presentation. Please keep in mind that we are not obligating ourselves to revise or publicly release the results of any revision to these forward-looking statements in light of new information or future events.

June 17, 2025
